SR-IOV NIC Partitioning

Tags:

#SR-IOV

#networking

Verified: tested on a Dell PowerEdge R730 with an Intel X710 10GbE NIC, running Red Hat Enterprise Linux 7 with QEMU-KVM.

SR-IOV

Single-root input/output virtualization (SR-IOV) ^[SR-IOV Intel network spec] is the capability of splitting a single physical PCI Express (PCIe) device into multiple virtual PCIe devices. Devices such as NVMe drives, network interfaces, and GPUs can use this capability to partition one piece of physical hardware among several consumers, for example the host and several virtual machines.

A physical PCI device is referred to as a PF (Physical Function), and a virtual PCI device is a VF (Virtual Function).
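To see which PCI devices on a host are SR-IOV PFs, you can look for the `sriov_totalvfs` sysfs attribute, which the kernel only creates for devices that have the SR-IOV capability. The following is a minimal sketch; the function name `list_sriov_pfs` and the `SYSFS_ROOT` override are hypothetical, added only so the function can be tried against a fake directory tree. On the host used in this post, the X710 port at `0000:82:00.3` would be expected to appear with its VF limit.

```sh
#!/bin/sh
# List SR-IOV capable PCI devices (PFs) and the maximum number of VFs each
# one supports. The kernel only creates sriov_totalvfs for SR-IOV devices.
# SYSFS_ROOT is a hypothetical override (default: /sys) for testing.
list_sriov_pfs() {
    local sys attr dev
    sys="${SYSFS_ROOT:-/sys}"
    for attr in "$sys"/bus/pci/devices/*/sriov_totalvfs; do
        [ -e "$attr" ] || continue   # the glob matched nothing
        dev=$(basename "$(dirname "$attr")")
        printf '%s %s\n' "$dev" "$(cat "$attr")"
    done
}
```

Usage: run `list_sriov_pfs` with no arguments; each output line is a PCI address followed by its `sriov_totalvfs` value.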

An excellent overview of SR-IOV can be found in Scott Lowe’s blog post.

SR-IOV Network Interfaces

General

In networking, an SR-IOV-capable NIC can split a single physical NIC port into multiple vNICs (VFs), which can be used on the bare metal host or attached to virtual guest instances.

Possible Use Cases

Note

Remember that SR-IOV VFs reside on a physical NIC, which may be a single point of failure if your network topology is not designed properly.

Thanks to SR-IOV's flexibility, many network topologies can be built with a minimal number of NICs, which means less cabling and maintenance.

With a single 10GbE/40GbE/100GbE SR-IOV NIC, we can build a setup that offers both redundancy and performance while taking up minimal physical space.

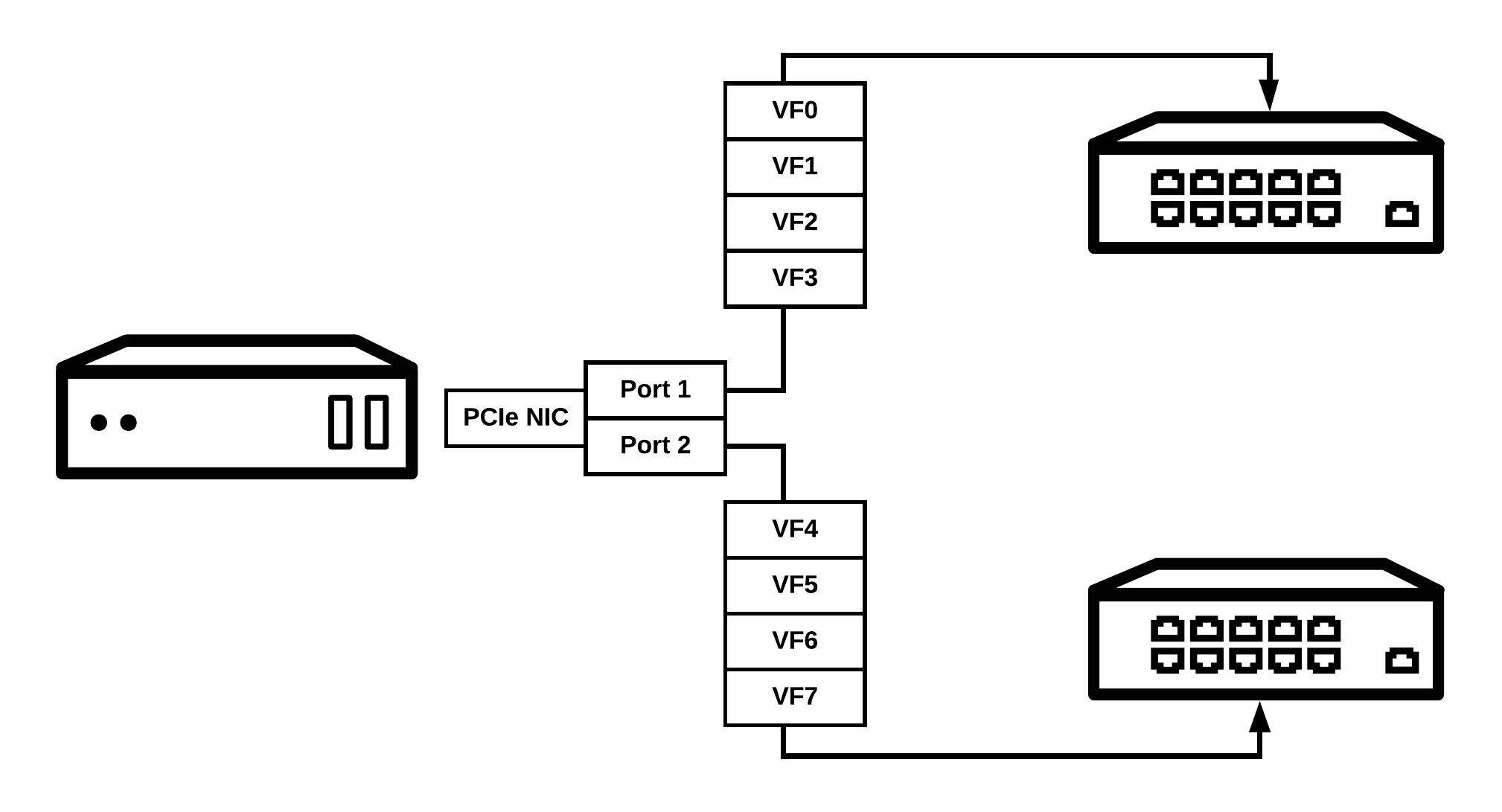

The diagram above shows a bare metal host containing a NIC with two ports connected to different switches.

Each port, represented as a NIC inside the operating system, is split into several vNICs (VFs), each of which is also represented as a NIC.

VFs can be leveraged to configure networking. For example, in a cloud environment, multiple networks representing different components of the cloud can reside on VFs instead of separate physical NICs. A QoS setting can be applied if some networks require more bandwidth than the rest.
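As an illustration of the per-network VF idea, the sketch below prints (as a dry run) the `ip link` commands that would place each VF on its own VLAN and cap its transmit rate with `max_tx_rate`, which iproute2 takes in Mbit/s. The function name `plan_vf_networks` and the `VF:VLAN:RATE` argument format are hypothetical, and whether a given rate limit is honored depends on the NIC and driver, so treat this as a sketch rather than a tested policy.

```sh
#!/bin/sh
# Print (dry run) iproute2 commands that put each VF of a PF on its own VLAN
# and cap its transmit rate. Each argument after the PF name is a
# hypothetical "VF:VLAN:MAX_TX_RATE_MBPS" triple, e.g. "0:100:1000".
plan_vf_networks() {
    local pf spec vf vlan rate rest
    pf="$1"
    shift
    for spec in "$@"; do
        vf="${spec%%:*}"
        rest="${spec#*:}"
        vlan="${rest%%:*}"
        rate="${rest#*:}"
        printf 'ip link set dev %s vf %s vlan %s\n' "$pf" "$vf" "$vlan"
        printf 'ip link set dev %s vf %s max_tx_rate %s\n' "$pf" "$vf" "$rate"
    done
}
```

Usage: review the output of `plan_vf_networks p4p4 0:100:1000 1:200:5000`, then pipe it to `sh` as root to apply it. Printing first keeps the plan reviewable before anything touches the NIC.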

Enabling SR-IOV

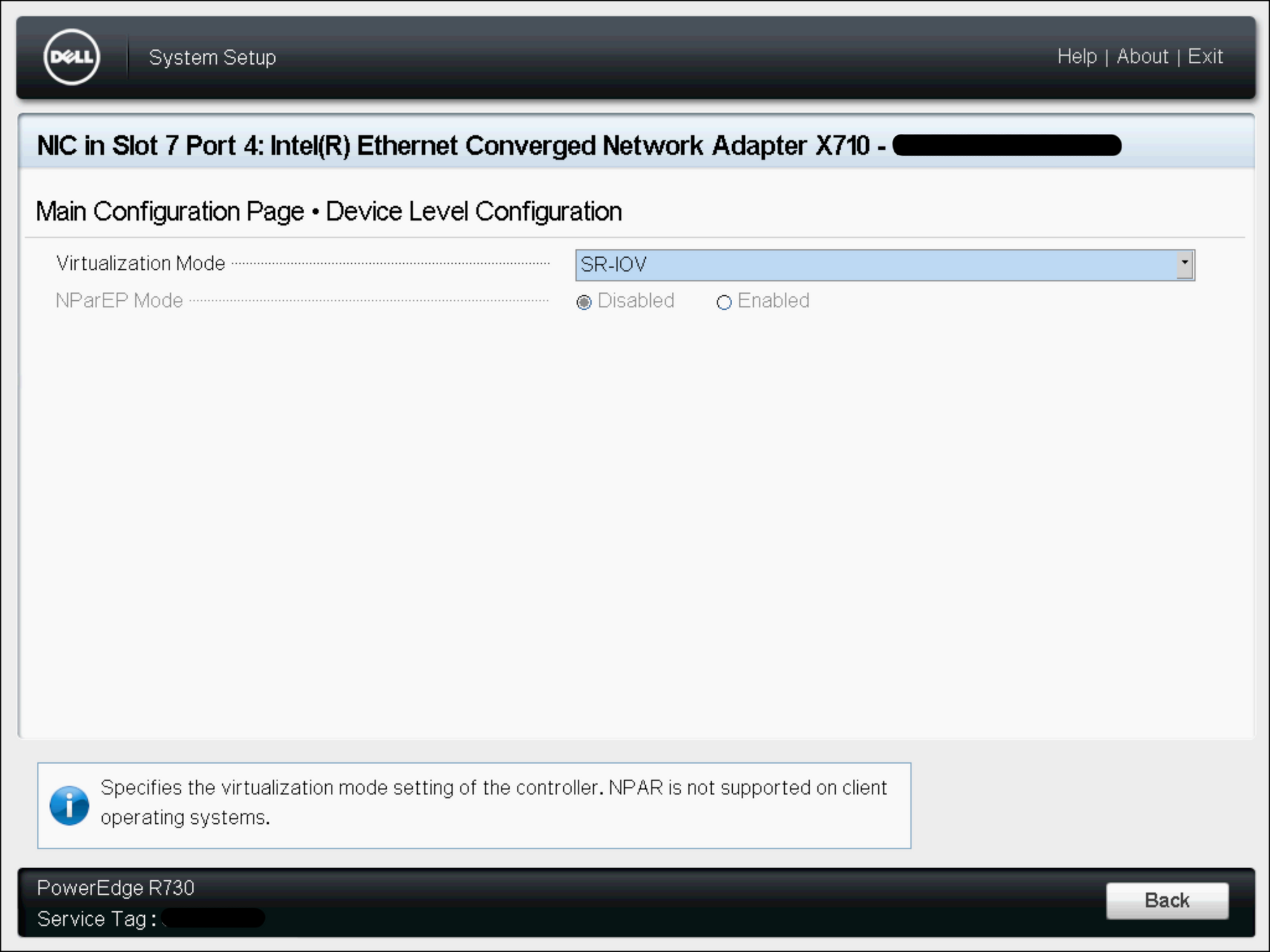

BIOS Configuration

Note

BIOS settings may differ depending on the BIOS and NIC vendors. Refer to the vendor's documentation.

On supported NICs, an SR-IOV option must be enabled in the BIOS:

Operating System

Note

Operating system settings may differ based on the NIC vendor and the operating system distribution. Refer to your vendor's or distribution's documentation.

Once SR-IOV has been enabled in the BIOS, verify the following:

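The kernel drivers for your NIC are loaded (i40e for the X710 PF and i40evf for its VFs):

```
lsmod | grep i40e
# Output
i40evf                103843  0
i40e                  354807  0
```

If the drivers are not loaded, load them one at a time (or with `modprobe -a`), because modprobe treats anything after the first module name as module parameters:

```
modprobe i40e
modprobe i40evf
```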
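The driver check can also be scripted. This sketch reads `/proc/modules`, the same file `lsmod` reads, and prints any requested module that is not loaded. The function name `missing_modules` and the `PROC_MODULES` override are hypothetical; note that a driver built into the kernel will not appear in `/proc/modules` and would be reported as missing.

```sh
#!/bin/sh
# Print each module named on the command line that is not currently loaded,
# based on the first field of /proc/modules (the file lsmod reads).
# PROC_MODULES is a hypothetical override (default: /proc/modules).
missing_modules() {
    local modules_file mod
    modules_file="${PROC_MODULES:-/proc/modules}"
    for mod in "$@"; do
        if ! awk -v m="$mod" '$1 == m { found = 1 } END { exit !found }' "$modules_file"; then
            printf '%s\n' "$mod"
        fi
    done
}
```

Usage: `for m in $(missing_modules i40e i40evf); do modprobe "$m"; done` loads only what is absent.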

Discover the PCI slot of the NIC:

```
lshw -c network -businfo
# Output
Bus info          Device     Class      Description
========================================================
...output truncated...
pci@0000:82:00.3  p4p4       network    Ethernet Controller X710 for 10GbE SFP+
```
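If `lshw` is not installed, the same interface-to-PCI mapping can be read from sysfs, since `/sys/class/net/<iface>/device` is a symlink into the PCI device tree. This is a minimal sketch; `iface_pci_address` and the `SYSFS_ROOT` override are hypothetical names used for illustration and testing.

```sh
#!/bin/sh
# Resolve a network interface name to its PCI address by following the
# /sys/class/net/<iface>/device symlink.
# SYSFS_ROOT is a hypothetical override (default: /sys) for testing.
iface_pci_address() {
    local sys link
    sys="${SYSFS_ROOT:-/sys}"
    link="$sys/class/net/$1/device"
    if [ ! -e "$link" ]; then
        echo "no PCI device found for $1" >&2
        return 1
    fi
    basename "$(readlink -f "$link")"
}
```

Usage: `iface_pci_address p4p4` should print the same address that `lshw` reports without the `pci@` prefix.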

View the maximum number of VFs the PF supports:

```
cat /sys/class/net/p4p4/device/sriov_totalvfs
# Output
32
```

View the number of currently active VFs:

```
cat /sys/class/net/p4p4/device/sriov_numvfs
# Output
0
```
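The two reads can be combined into a one-line status report. The sketch below is illustrative only: `sriov_status` and `SYSFS_ROOT` are hypothetical names, and the function simply prints the `sriov_numvfs` and `sriov_totalvfs` values, failing if the interface has no SR-IOV attributes.

```sh
#!/bin/sh
# Print "<iface>: <active> of <total> VFs active" from the PF's sysfs files.
# SYSFS_ROOT is a hypothetical override (default: /sys) for testing.
sriov_status() {
    local sys dev
    sys="${SYSFS_ROOT:-/sys}"
    dev="$sys/class/net/$1/device"
    if [ ! -r "$dev/sriov_totalvfs" ]; then
        echo "$1: no SR-IOV attributes found" >&2
        return 1
    fi
    printf '%s: %s of %s VFs active\n' "$1" \
        "$(cat "$dev/sriov_numvfs")" "$(cat "$dev/sriov_totalvfs")"
}
```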

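Set the number of VFs to create. The value can range from 0 to `sriov_totalvfs`, and the resulting VFs are indexed from 0 (writing 4 creates vf 0 through vf 3):

```
echo 4 > /sys/class/net/p4p4/device/sriov_numvfs
```

To remove all VFs, write 0:

```
echo 0 > /sys/class/net/p4p4/device/sriov_numvfs
```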
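When VFs already exist, the kernel refuses (with EBUSY) to change the count directly to a different non-zero value, so a resize has to go through 0. The sketch below wraps that rule together with a check against `sriov_totalvfs`; `set_num_vfs` and `SYSFS_ROOT` are hypothetical names, and the function must run as root against the real `/sys`. Keep in mind that a value written to sysfs this way does not survive a reboot.

```sh
#!/bin/sh
# Set the number of VFs on a PF after validating the request against
# sriov_totalvfs. The kernel rejects changing a non-zero VF count directly to
# another non-zero count, so existing VFs are torn down (0) first.
# SYSFS_ROOT is a hypothetical override (default: /sys) for testing.
set_num_vfs() {
    local sys dev want total current
    sys="${SYSFS_ROOT:-/sys}"
    dev="$sys/class/net/$1/device"
    want="$2"
    case "$want" in
        ''|*[!0-9]*) echo "VF count must be a non-negative integer" >&2; return 1 ;;
    esac
    total=$(cat "$dev/sriov_totalvfs") || return 1
    current=$(cat "$dev/sriov_numvfs") || return 1
    if [ "$want" -gt "$total" ]; then
        echo "$1 supports at most $total VFs" >&2
        return 1
    fi
    if [ "$want" -eq "$current" ]; then
        return 0                                  # nothing to change
    fi
    if [ "$current" -ne 0 ] && [ "$want" -ne 0 ]; then
        echo 0 > "$dev/sriov_numvfs" || return 1  # remove existing VFs first
    fi
    echo "$want" > "$dev/sriov_numvfs"
}
```

Usage: `set_num_vfs p4p4 4` creates four VFs; calling `set_num_vfs p4p4 8` afterwards resets to 0 and then creates eight.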

Verify that the VFs were created:

```
# View the configured number of virtual functions
cat /sys/class/net/p4p4/device/sriov_numvfs
# Output
4

# View the physical interface
ip link show p4p4
# Output
13: p4p4: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc mq state UP mode DEFAULT group default qlen 1000
    link/ether 3c:fd:fe:31:1f:c6 brd ff:ff:ff:ff:ff:ff
    vf 0 MAC 00:00:00:00:00:00, spoof checking on, link-state auto, trust off
    vf 1 MAC 00:00:00:00:00:00, spoof checking on, link-state auto, trust off
    vf 2 MAC 00:00:00:00:00:00, spoof checking on, link-state auto, trust off
    vf 3 MAC 00:00:00:00:00:00, spoof checking on, link-state auto, trust off

# View the /sys/class/net entries for the PF and its VFs
ls -l /sys/class/net/p4p4*
# Output
lrwxrwxrwx. 1 root root 0 May 24 22:30 /sys/class/net/p4p4 -> ../../devices/pci0000:80/0000:80:02.0/0000:82:00.3/net/p4p4
lrwxrwxrwx. 1 root root 0 May 27 18:58 /sys/class/net/p4p4_0 -> ../../devices/pci0000:80/0000:80:02.0/0000:82:0e.0/net/p4p4_0
lrwxrwxrwx. 1 root root 0 May 27 18:58 /sys/class/net/p4p4_1 -> ../../devices/pci0000:80/0000:80:02.0/0000:82:0e.1/net/p4p4_1
lrwxrwxrwx. 1 root root 0 May 27 18:58 /sys/class/net/p4p4_2 -> ../../devices/pci0000:80/0000:80:02.0/0000:82:0e.2/net/p4p4_2
lrwxrwxrwx. 1 root root 0 May 27 18:58 /sys/class/net/p4p4_3 -> ../../devices/pci0000:80/0000:80:02.0/0000:82:0e.3/net/p4p4_3
```
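The kernel also links each VF from the PF's PCI directory as `virtfn0`, `virtfn1`, and so on, which gives a direct VF-index-to-PCI-address mapping. The sketch below lists that mapping along with the host netdev name, if any; `list_vfs` and `SYSFS_ROOT` are hypothetical names, and a VF that is not bound to a host network driver is shown with `-`.

```sh
#!/bin/sh
# List the VFs of a PF as "index PCI-address netdev", using the virtfnN
# symlinks in the PF's PCI device directory. VFs with no host netdev
# (for example, ones not bound to i40evf) are shown with "-".
# SYSFS_ROOT is a hypothetical override (default: /sys) for testing.
list_vfs() {
    local sys link idx addr netdev n
    sys="${SYSFS_ROOT:-/sys}"
    for link in "$sys/class/net/$1/device"/virtfn*; do
        [ -e "$link" ] || continue
        idx="${link##*virtfn}"
        addr=$(basename "$(readlink -f "$link")")
        netdev="-"
        for n in "$link"/net/*; do
            [ -e "$n" ] && netdev=$(basename "$n")
            break
        done
        printf '%s %s %s\n' "$idx" "$addr" "$netdev"
    done | sort -n   # virtfn10 would otherwise sort before virtfn2
}
```

Usage: `list_vfs p4p4` should list the four VFs at `0000:82:0e.0` to `0000:82:0e.3` shown in the `ls -l` output above.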

Leveraging SR-IOV VFs

Once SR-IOV has been configured, the VFs can be used as regular NICs on the host or passed through to VMs for increased performance.

VFs As NICs

Since VFs are represented as NICs, we can use native Linux tools such as ip, ifconfig, nmcli, network scripts, and others to configure the network.
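For instance, with `ip` a VF can be given an address and brought up exactly like a physical NIC. The sketch below only prints the commands (a dry run); `plan_vf_ip` is a hypothetical helper, and unlike a network-scripts file, this configuration is lost on reboot.

```sh
#!/bin/sh
# Print (dry run) iproute2 commands that bring a VF up and assign it a
# static IPv4 address in CIDR form. This configuration is not persistent.
plan_vf_ip() {
    local vf cidr
    vf="$1"
    cidr="$2"
    case "$cidr" in
        */*) ;;
        *) echo "address must be in CIDR form, e.g. 10.20.151.125/24" >&2; return 1 ;;
    esac
    printf 'ip link set dev %s up\n' "$vf"
    printf 'ip addr add %s dev %s\n' "$cidr" "$vf"
}
```

Usage: `plan_vf_ip p4p4_1 10.20.151.125/24 | sh` (as root) applies the same address used in the network-scripts example.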

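Before using the VFs, make sure the SR-IOV PF itself has no IP configuration. In the output below, the PF carries only its automatically assigned IPv6 link-local address: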

```
ifconfig p4p4
# Output
p4p4: flags=4163<UP,BROADCAST,RUNNING,MULTICAST> mtu 1500
        inet6 fe80::3efd:feff:fe33:a8a6 prefixlen 64 scopeid 0x20<link>
        ether 3c:fd:fe:33:a8:a6 txqueuelen 1000 (Ethernet)
        RX packets 0 bytes 0 (0.0 B)
        RX errors 0 dropped 0 overruns 0 frame 0
        TX packets 0 bytes 0 (0.0 B)
        TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0
```

Now you can configure a VF. Here is an example network-scripts configuration file for p4p4_1:

```
cat /etc/sysconfig/network-scripts/ifcfg-p4p4_1
# Output
DEVICE=p4p4_1
ONBOOT=yes
HOTPLUG=no
NM_CONTROLLED=no
PEERDNS=no
BOOTPROTO=static
IPADDR=10.20.151.125
NETMASK=255.255.255.0
```
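When several VFs need the same kind of static configuration, the file above can be generated. This sketch writes the same eight keys for any VF name, address, and netmask; `write_vf_ifcfg` and the `CONFIG_DIR` override are hypothetical names, with `CONFIG_DIR` defaulting to the RHEL 7 network-scripts directory.

```sh
#!/bin/sh
# Write a static network-scripts ifcfg file for a VF, using the same keys as
# the example above. CONFIG_DIR is a hypothetical override
# (default: /etc/sysconfig/network-scripts).
write_vf_ifcfg() {
    local vf ip netmask dir
    vf="$1"
    ip="$2"
    netmask="$3"
    dir="${CONFIG_DIR:-/etc/sysconfig/network-scripts}"
    cat > "$dir/ifcfg-$vf" <<EOF
DEVICE=$vf
ONBOOT=yes
HOTPLUG=no
NM_CONTROLLED=no
PEERDNS=no
BOOTPROTO=static
IPADDR=$ip
NETMASK=$netmask
EOF
}
```

Usage: `write_vf_ifcfg p4p4_1 10.20.151.125 255.255.255.0` (as root) recreates the file shown above.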

Bring the VF up and verify that the settings were applied:

```
# Bring the interface online
ifup p4p4_1

# View the interface
ifconfig p4p4_1
# Output
p4p4_1: flags=4163<UP,BROADCAST,RUNNING,MULTICAST> mtu 1500
        inet 10.20.151.125 netmask 255.255.255.0 broadcast 10.20.151.255
        inet6 fe80::c8d9:10ff:fe01:8488 prefixlen 64 scopeid 0x20<link>
        ether ca:d9:10:01:84:88 txqueuelen 1000 (Ethernet)
        RX packets 2430103 bytes 320710952 (305.8 MiB)
        RX errors 0 dropped 0 overruns 0 frame 0
        TX packets 2369103 bytes 433952302 (413.8 MiB)
        TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0
```
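In automation, the interface may not report link immediately after it is brought up. A small polling loop on the sysfs `operstate` attribute is one way to wait for it; `wait_for_link`, its default 10-second timeout, and the `SYSFS_ROOT` override are all hypothetical choices for this sketch.

```sh
#!/bin/sh
# Poll /sys/class/net/<iface>/operstate once per second until it reads "up",
# giving up after the timeout (in seconds, default 10).
# SYSFS_ROOT is a hypothetical override (default: /sys) for testing.
wait_for_link() {
    local sys iface timeout i state
    sys="${SYSFS_ROOT:-/sys}"
    iface="$1"
    timeout="${2:-10}"
    i=0
    while [ "$i" -lt "$timeout" ]; do
        state=$(cat "$sys/class/net/$iface/operstate" 2>/dev/null) || state=""
        if [ "$state" = "up" ]; then
            return 0
        fi
        sleep 1
        i=$((i + 1))
    done
    echo "$iface did not report link up within ${timeout}s" >&2
    return 1
}
```

Usage: `ifup p4p4_1 && wait_for_link p4p4_1 30`.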

VFs For VMs

Note

PCI Passthrough and more in-depth topics are out of the scope of this blog post.

Note

Requires AMD-Vi or Intel VT-d to be enabled on the hypervisor host.

Note

Refer to your hypervisor's documentation regarding SR-IOV.
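The AMD-Vi/Intel VT-d requirement in the notes above can be sanity-checked from the host. A common heuristic is that `/sys/kernel/iommu_groups` only contains group directories when an IOMMU is active; on Intel hosts running RHEL 7 this typically also requires `intel_iommu=on` on the kernel command line. The function name `iommu_active` and the `SYSFS_ROOT` override are hypothetical.

```sh
#!/bin/sh
# Succeed if at least one IOMMU group exists, which suggests the IOMMU
# (AMD-Vi or Intel VT-d) is enabled and active in the running kernel.
# SYSFS_ROOT is a hypothetical override (default: /sys) for testing.
iommu_active() {
    local sys group
    sys="${SYSFS_ROOT:-/sys}"
    for group in "$sys"/kernel/iommu_groups/*; do
        [ -e "$group" ] && return 0
    done
    return 1
}
```

Usage: `iommu_active || echo "enable VT-d/AMD-Vi in the BIOS and the kernel"`.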

On supported hypervisors, SR-IOV allows VMs to access a VF's PCI device directly, which increases performance compared to the generic emulated or paravirtualized vNICs provided by the hypervisor.

Refer to the Red Hat Enterprise Linux 7 virtualization guide to boot an instance with SR-IOV VFs using KVM.
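With libvirt, a VF is commonly attached through an `<interface type='hostdev'>` element that names the VF's PCI address. The sketch below builds that element from the PF's `virtfnN` symlink; `vf_hostdev_xml` and `SYSFS_ROOT` are hypothetical names, and `managed='yes'` asks libvirt to detach the VF from its host driver before the guest starts. Check the result against your libvirt version's domain XML documentation before relying on it.

```sh
#!/bin/sh
# Print a libvirt <interface type='hostdev'> element for VF number $2 of PF $1,
# using the PCI address behind the PF's virtfnN symlink.
# SYSFS_ROOT is a hypothetical override (default: /sys) for testing.
vf_hostdev_xml() {
    local sys link addr domain bus slot func rest
    sys="${SYSFS_ROOT:-/sys}"
    link="$sys/class/net/$1/device/virtfn$2"
    if [ ! -e "$link" ]; then
        echo "VF $2 not found on $1" >&2
        return 1
    fi
    addr=$(basename "$(readlink -f "$link")")   # e.g. 0000:82:0e.0
    # Split DOMAIN:BUS:SLOT.FUNCTION into its four parts.
    domain="${addr%%:*}"
    rest="${addr#*:}"
    bus="${rest%%:*}"
    rest="${rest#*:}"
    slot="${rest%%.*}"
    func="${rest#*.}"
    cat <<EOF
<interface type='hostdev' managed='yes'>
  <source>
    <address type='pci' domain='0x$domain' bus='0x$bus' slot='0x$slot' function='0x$func'/>
  </source>
</interface>
EOF
}
```

Usage: `vf_hostdev_xml p4p4 0 > vf0.xml`, then `virsh attach-device guest1 vf0.xml --config`, where `guest1` is a placeholder domain name.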